Under 1000 RU per second dummy data importer into Azure Cosmos DB for MongoDB

This post examines how to import data into Azure Cosmos DB for MongoDB while staying within the free throughput limit of 1000 Request Units per second.

It also showcases the use of Bicep templates, as well as the Bogus and ShellProgressBar C# libraries.

The source code is available on GitHub here.

Request Units Overview

A Request Unit (RU) is a measure of the cost of an operation in Azure Cosmos DB, standardised across the RU-based APIs (NoSQL, MongoDB, Apache Cassandra, Table, and Apache Gremlin). Azure Cosmos DB for PostgreSQL is provisioned by compute nodes rather than by RUs.

Request Units per second (RU/s) is a measure of throughput, i.e. the amount of work that can be processed in a given time.

The documentation provides the following estimates:

- 1 RU for a point read of a 1 KB document

- 10 RUs for a point read of a 100 KB document

- 5.5 RUs to insert a 1 KB document without indexing

For example, inserting 20 MB of data with 1000 RU/s throughput takes approximately 2 minutes, rounding the insert cost up from 5.5 to 6 RU per KB and treating 20 MB as 20,000 KB:

data_size * insert_RU_factor / throughput = (20 MB) * (6 RU / KB) / (1000 RU / s) = 2 min
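The same estimate can be written as a small helper, which makes it easy to try other data sizes or throughput values. This is only a sketch: `EstimateInsertTime` and its parameter names are made up for illustration and are not part of the post's source code.

```csharp
using System;

// Inputs from the example above: 20 MB taken as 20,000 KB, 6 RU per KB, 1000 RU/s.
TimeSpan estimate = EstimateInsertTime(dataSizeKb: 20_000, insertRuPerKb: 6, throughputRuPerSecond: 1000);
Console.WriteLine(estimate); // prints 00:02:00

// Hypothetical helper: total request units divided by the available throughput.
static TimeSpan EstimateInsertTime(double dataSizeKb, double insertRuPerKb, double throughputRuPerSecond)
{
    double totalRequestUnits = dataSizeKb * insertRuPerKb;
    return TimeSpan.FromSeconds(totalRequestUnits / throughputRuPerSecond);
}
```

The result is a rough lower bound, since indexing and per-request overhead add to the RU cost of real inserts.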

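The free tier of Azure Cosmos DB covers the first 1000 RU/s of provisioned throughput. Requests that exceed the provisioned throughput are normally rate-limited and fail with 429 Too Many Requests errors. One way to avoid this is to enable the Server Side Retry option in the Azure Cosmos DB account settings, which lets the service retry rate-limited requests on the server side instead of immediately returning the error.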

Data Generation

One way to generate sample data is by using the Bogus library.

This commit sets up the Product model and its fake data generator.

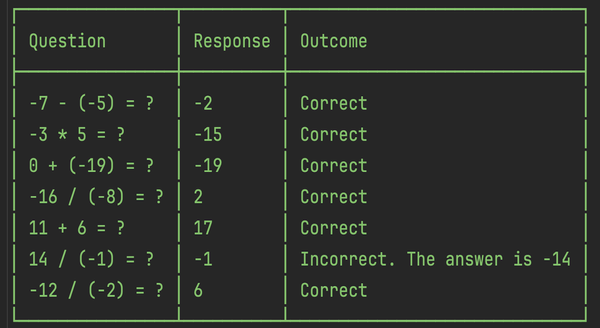

Below are the first five generated fake products. The random seed ensures that on re-running the code, all product properties, except for ProductId, remain the same.

{"ProductId":"a3542a50-8345-4a79-9be5-e3f4f02a65c4","Name":"Gorgeous Wooden Shoes","Description":"Expedita et ex rerum aut rerum doloremque dolore voluptatem.","Categories":["Clothing","Baby","Home","Kids"],"Price":702.09}

{"ProductId":"d11a9e73-e144-4592-8962-bb6b3984440b","Name":"Tasty Wooden Keyboard","Description":"Quibusdam ipsum autem nemo qui rerum.","Categories":["Clothing","Books"],"Price":991.88}

{"ProductId":"0a14b155-c4e9-4243-9fb5-921ac05b8995","Name":"Licensed Fresh Car","Description":"Cupiditate enim asperiores eligendi iure iusto cum.","Categories":["Health","Computers","Garden","Beauty"],"Price":170.26}

{"ProductId":"ec9b1609-d1ac-43ab-8075-ad66d1ae7801","Name":"Incredible Metal Shirt","Description":"Hic dicta aut corrupti.","Categories":["Movies","Music","Electronics"],"Price":312.59}

{"ProductId":"e748f88e-6767-4a64-9b1b-0207811d5093","Name":"Awesome Plastic Chips","Description":"Quibusdam eius aut dolor molestiae a ea est rerum quia.","Categories":["Garden","Movies","Health"],"Price":360.86}
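A generator producing records of this shape might look roughly like the sketch below. It is not the code from the commit: the `Product` property types, the seed value, the price range, and the number of categories are assumptions inferred from the sample output. `ProductId` comes from `Guid.NewGuid()` rather than from the seeded randomizer, which is one way to get the behaviour described above, where every property except `ProductId` is reproducible.

```csharp
using System;
using System.Text.Json;
using Bogus;

var productFaker = new Faker<Product>()
    .UseSeed(42) // arbitrary seed, not necessarily the one used in the post
    // Guid.NewGuid() ignores the seed, so ProductId differs on every run.
    .RuleFor(p => p.ProductId, _ => Guid.NewGuid().ToString())
    .RuleFor(p => p.Name, f => f.Commerce.ProductName())
    .RuleFor(p => p.Description, f => f.Lorem.Sentence())
    // Assumed: between one and four categories per product.
    .RuleFor(p => p.Categories, f => f.Commerce.Categories(f.Random.Int(1, 4)))
    // Assumed price range, rounded to two decimal places.
    .RuleFor(p => p.Price, f => Math.Round(f.Random.Decimal(1m, 1000m), 2));

foreach (var product in productFaker.Generate(5))
{
    Console.WriteLine(JsonSerializer.Serialize(product));
}

// Assumed model, mirroring the fields visible in the sample output.
public class Product
{
    public string ProductId { get; set; } = "";
    public string Name { get; set; } = "";
    public string Description { get; set; } = "";
    public string[] Categories { get; set; } = Array.Empty<string>();
    public decimal Price { get; set; }
}
```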

Azure Infrastructure Setup

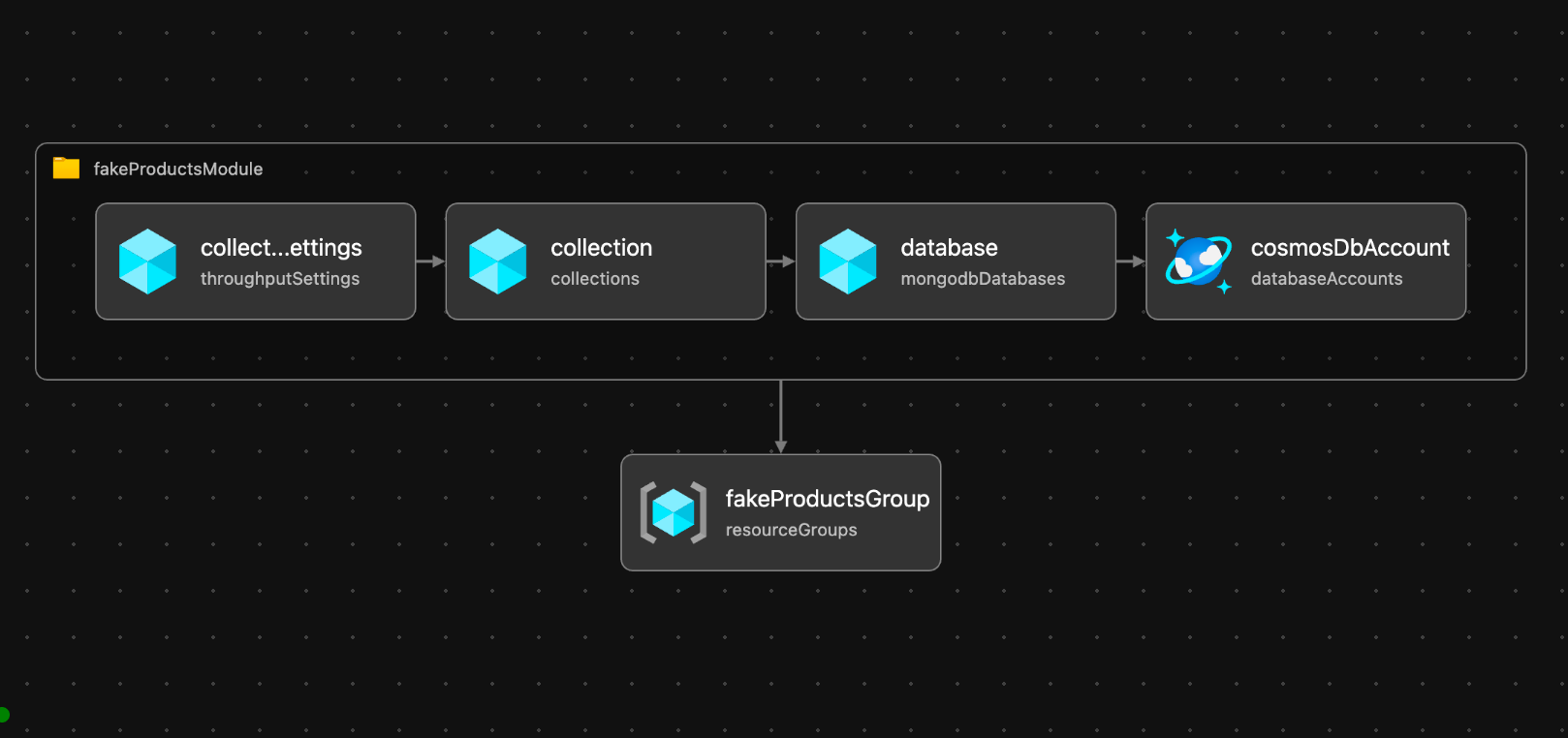

A reliable way to ensure consistent infrastructure setup is by using deployment templates. This commit prepares such a template. It creates a resource group containing an Azure Cosmos DB account. The account has a database, which has a collection with specific settings.

The template can be deployed by setting the value of $location and running the following command:

az deployment sub create --template-file main.bicep --location $location

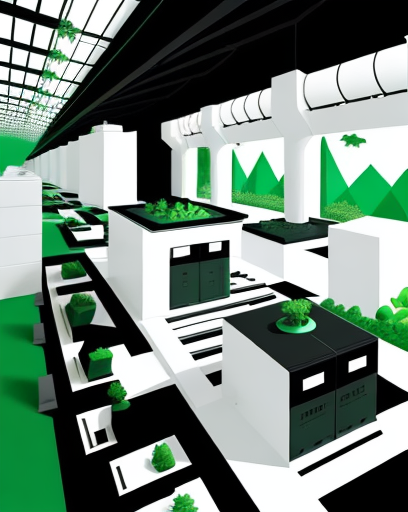

Here is the infrastructure diagram taken from the VS Code Bicep Visualiser:

Note the DisableRateLimitingResponses setting on the account, which enables the Server Side Retry option.

Also, note the enableFreeTier: true setting and the throughput limit of 1000 on the account. These settings ensure that Cosmos DB remains free.

Lastly, note the throughput of 1000 on the collection. The default is 400; raising it to the full 1000 is fine here because there are no other collections to share the account's throughput with.
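As a rough illustration of where these three settings live, here is a fragment of what a resource-group-scoped module could contain. It is a sketch, not the template from the commit: the parameter names, default values, and API version are assumptions, and the subscription-level `main.bicep` that creates the resource group is left out.

```bicep
// Hypothetical module fragment; all names and defaults are placeholders.
param location string = resourceGroup().location
param accountName string
param databaseName string = 'shop'
param collectionName string = 'products'

resource account 'Microsoft.DocumentDB/databaseAccounts@2023-04-15' = {
  name: accountName
  location: location
  kind: 'MongoDB'
  properties: {
    databaseAccountOfferType: 'Standard'
    // Free tier plus an account-wide cap keeps the account within 1000 RU/s.
    enableFreeTier: true
    capacity: {
      totalThroughputLimit: 1000
    }
    capabilities: [
      {
        // Enables the Server Side Retry option.
        name: 'DisableRateLimitingResponses'
      }
    ]
    locations: [
      {
        locationName: location
        failoverPriority: 0
      }
    ]
  }
}

resource database 'Microsoft.DocumentDB/databaseAccounts/mongodbDatabases@2023-04-15' = {
  parent: account
  name: databaseName
  properties: {
    resource: {
      id: databaseName
    }
  }
}

resource collection 'Microsoft.DocumentDB/databaseAccounts/mongodbDatabases/collections@2023-04-15' = {
  parent: database
  name: collectionName
  properties: {
    resource: {
      id: collectionName
    }
    options: {
      // Raised from the default of 400 to use the whole free allowance.
      throughput: 1000
    }
  }
}
```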

Data Upload

The first commit adds configurations and establishes a connection to MongoDB. The database and collection names are stored in appsettings.json, while the connection string is stored as a user secret.

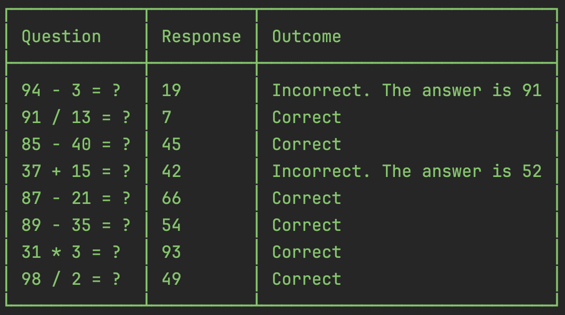
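A minimal sketch of this kind of setup is shown below. The configuration keys (`MongoDb:ConnectionString`, `MongoDb:DatabaseName`, `MongoDb:CollectionName`) are invented for illustration and may differ from the ones in the repository. It assumes the `Microsoft.Extensions.Configuration.Json`, `Microsoft.Extensions.Configuration.UserSecrets`, and `MongoDB.Driver` packages.

```csharp
using System;
using Microsoft.Extensions.Configuration;
using MongoDB.Bson;
using MongoDB.Driver;

// appsettings.json must be copied to the output directory, and the project
// needs a UserSecretsId (created by "dotnet user-secrets init").
var configuration = new ConfigurationBuilder()
    .AddJsonFile("appsettings.json")
    .AddUserSecrets<Program>()
    .Build();

// Hypothetical keys: the connection string comes from user secrets,
// the database and collection names from appsettings.json.
string connectionString = Require(configuration, "MongoDb:ConnectionString");
string databaseName = Require(configuration, "MongoDb:DatabaseName");
string collectionName = Require(configuration, "MongoDb:CollectionName");

var client = new MongoClient(connectionString);
var collection = client.GetDatabase(databaseName).GetCollection<BsonDocument>(collectionName);

// A cheap round trip to confirm that the connection works.
long count = await collection.CountDocumentsAsync(FilterDefinition<BsonDocument>.Empty);
Console.WriteLine($"Connected. The collection currently holds {count} documents.");

// Fails fast with a readable message when a setting is missing.
static string Require(IConfiguration configuration, string key) =>
    configuration[key] ?? throw new InvalidOperationException($"Missing configuration value '{key}'.");
```

Keeping the connection string in user secrets means it never ends up in source control alongside appsettings.json.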

The second commit implements the data upload logic. The implementation inserts data in batches, allowing progress to be tracked using the ShellProgressBar library.
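The batching idea can be sketched as follows. This is an illustration under stated assumptions rather than the code from the commit: the environment variable name, the `shop` and `products` names, the batch size of 100, the simplified `Product` model, and the `UploadInBatchesAsync` helper are all hypothetical. It requires .NET 6 or later for `Enumerable.Chunk`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;
using MongoDB.Driver;
using ShellProgressBar;

// Assumption: the connection string is supplied through an environment variable.
var connectionString = Environment.GetEnvironmentVariable("MONGODB_CONNECTION_STRING")
    ?? throw new InvalidOperationException("MONGODB_CONNECTION_STRING is not set.");

var collection = new MongoClient(connectionString)
    .GetDatabase("shop")                 // placeholder database name
    .GetCollection<Product>("products"); // placeholder collection name

// Stand-in data; the post generates its products with Bogus instead.
var products = Enumerable.Range(1, 1000)
    .Select(i => new Product { ProductId = Guid.NewGuid().ToString(), Name = $"Product {i}" })
    .ToList();

await UploadInBatchesAsync(collection, products, batchSize: 100);

// Inserts the products batch by batch and advances the progress bar once per batch.
static async Task UploadInBatchesAsync(
    IMongoCollection<Product> collection, IReadOnlyCollection<Product> products, int batchSize)
{
    var batches = products.Chunk(batchSize).ToList();
    var options = new ProgressBarOptions { ForegroundColor = ConsoleColor.Green };
    using var progressBar = new ProgressBar(batches.Count, "Uploading products", options);

    var uploaded = 0;
    foreach (var batch in batches)
    {
        await collection.InsertManyAsync(batch);
        uploaded += batch.Length;
        progressBar.Tick($"Uploaded {uploaded} of {products.Count} products");
    }
}

// Simplified model for the sketch; the real one has more fields.
public class Product
{
    public string ProductId { get; set; } = "";
    public string Name { get; set; } = "";
}
```

Awaiting each batch before sending the next keeps at most one batch in flight at a time, so the batch size also controls how often the progress bar advances.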

Conclusion

This post demonstrated how to import data into Azure Cosmos DB for MongoDB while staying within the free throughput limit of 1000 RU/s.